#ArtificialEmotionalIntelligence #SociallyAssistiveRobots #SeniorCommunities

CMEDIA: Vulnerable or older users find socially assistive robots (SARs) engaging and helpful for completing everyday activities. In addition to increasing users' independence, these robots could stimulate them mentally and offer basic emotional support, Tech Xplore (https://techxplore.com/news) reported on Feb 23.

SARs are a class of robotic systems designed specifically to help seniors in their daily lives. To support users most effectively, however, these robots should be able to engage in meaningful social interactions, identifying users' emotions and responding to them appropriately. This could ultimately increase users' trust in the robots while also promoting their emotional wellbeing.

Researchers at the University of Denver, DreamFace Technologies, and the University of Colorado recently carried out a small pilot study to explore how older adults' perceptions of socially assistive robots change depending on whether the robots have artificial emotional intelligence. Their findings, published in IEEE Transactions on Affective Computing, suggest that older adults tend to perceive robots programmed to behave more empathically as more engaging and likable.

The researchers recruited 10 older adults living at Eaton Senior Communities, an independent senior care facility in Lakewood, Colorado. After interacting with Ryan, a socially assistive robot created by DreamFace Technologies, the participants were asked to share their feedback and perceptions. The researchers used two versions of the robot: one that exhibits empathic behavior and one that does not respond to the user's emotions.

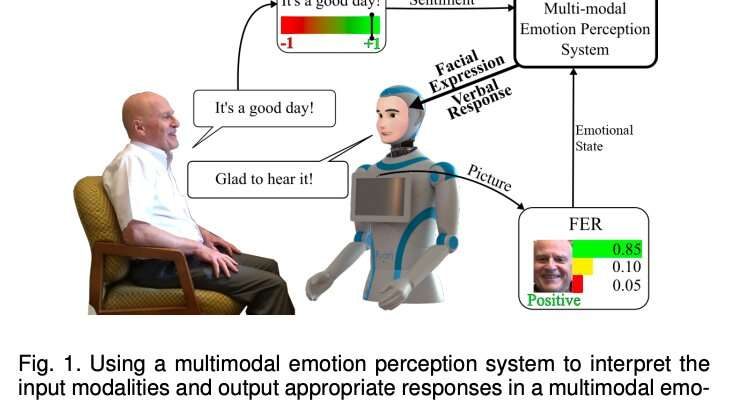

“The empathic Ryan utilizes a multimodal emotion recognition algorithm and a multimodal emotion expression system,” Hojjat Abdollahi and his colleagues explained in their paper. “Using different input modalities for emotion (i.e., facial expression and speech sentiment), the empathic Ryan detects users’ emotional state and utilizes an affective dialog manager to generate a response. On the other hand, the non-empathic Ryan lacks facial expression and uses scripted dialogs that do not factor in the users’ emotional state.”
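To make the difference between the two versions more concrete, the sketch below shows one common way to combine two modalities: weighted late fusion of a facial-expression classifier's probabilities with a speech-sentiment score, followed by a response lookup. It is a hypothetical illustration, not Ryan's actual algorithm. The emotion labels, the 0.6 face weight, the sentiment-to-emotion mapping and the response templates are all assumptions, and a simple template table stands in for the paper's affective dialog manager.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical emotion label set; the paper's actual labels are not given in this article.
EMOTIONS = ("happy", "neutral", "sad")


@dataclass
class Observation:
    face_probs: dict[str, float]  # assumed output of a facial-expression classifier
    speech_sentiment: float       # assumed speech-sentiment score in [-1, 1]


def sentiment_to_probs(score: float) -> dict[str, float]:
    """Assumed mapping of a sentiment score in [-1, 1] onto the emotion labels."""
    pos = max(score, 0.0)
    neg = max(-score, 0.0)
    return {"happy": pos, "neutral": 1.0 - pos - neg, "sad": neg}


def fuse(obs: Observation, face_weight: float = 0.6) -> str:
    """Weighted late fusion of both modalities; returns the highest-scoring emotion."""
    speech_probs = sentiment_to_probs(obs.speech_sentiment)
    fused = {
        e: face_weight * obs.face_probs.get(e, 0.0) + (1 - face_weight) * speech_probs[e]
        for e in EMOTIONS
    }
    return max(fused, key=fused.get)


# Hypothetical response templates standing in for an affective dialog manager.
RESPONSES = {
    "happy": "You seem cheerful today! What made your day good?",
    "neutral": "How has your day been so far?",
    "sad": "I'm sorry you're feeling down. Would you like to talk about it?",
}


def empathic_reply(obs: Observation) -> str:
    """Empathic version: the reply depends on the detected emotional state."""
    return RESPONSES[fuse(obs)]


def scripted_reply(turn: int) -> str:
    """Non-empathic baseline: ignores the user's emotion and follows a fixed script."""
    script = ["Hello!", "How has your day been so far?", "Goodbye!"]
    return script[turn % len(script)]
```

For example, a mostly sad face combined with negative speech sentiment, `Observation({"happy": 0.1, "neutral": 0.2, "sad": 0.7}, -0.5)`, fuses to `"sad"`, so `empathic_reply` returns the consoling template. `scripted_reply` returns the same line for a given turn no matter how the user feels.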

Each of the 10 participants was asked to interact with Ryan for 15 minutes, twice a week, over three consecutive weeks. The participants were randomly assigned to one of two groups. All participants interacted with both versions of Ryan, but the order in which they encountered the two versions varied.
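The design above resembles a counterbalanced crossover study. The sketch below shows, under stated assumptions, how such an assignment could be generated: participants are shuffled, split into two groups, and each group receives the two versions in the opposite order. The equal group sizes and the `assign_orders` helper are hypothetical and are not taken from the paper. The session arithmetic follows directly from the schedule described in the article.

```python
import random

# Schedule as described in the article.
SESSIONS_PER_WEEK = 2
WEEKS = 3
MINUTES_PER_SESSION = 15

total_sessions = SESSIONS_PER_WEEK * WEEKS            # 6 sessions per participant
total_minutes = total_sessions * MINUTES_PER_SESSION  # 90 minutes per participant


def assign_orders(participant_ids, seed=None):
    """Hypothetical helper: randomly split participants into two groups
    that meet the two robot versions in opposite orders."""
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    half = len(ids) // 2  # assumes groups of (near) equal size
    orders = {}
    for pid in ids[:half]:
        orders[pid] = ("empathic", "non-empathic")
    for pid in ids[half:]:
        orders[pid] = ("non-empathic", "empathic")
    return orders
```

Counterbalancing the order in this way helps keep effects such as novelty or growing familiarity with the robot from being mistaken for a difference between the two versions.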

Participants rated their mood on a scale from 0 to 10 both before and after interacting with Ryan. After the study ended, the researchers also interviewed each participant individually and asked them to complete an exit survey.
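Pre- and post-interaction ratings like these are often summarized as the mean change in mood for each robot version. The minimal sketch below computes that summary. The `mood_changes` helper is hypothetical and the example ratings are invented for illustration only, not data from the study. A raw mean difference is also not a statistical test, and the researchers' actual analysis may differ.

```python
from statistics import mean


def mood_changes(sessions):
    """Mean mood change (after minus before) per condition.

    sessions: iterable of (condition, mood_before, mood_after) tuples,
    with moods rated on the 0-10 scale.
    """
    changes = {}
    for condition, before, after in sessions:
        if not (0 <= before <= 10 and 0 <= after <= 10):
            raise ValueError("mood ratings must be on the 0-10 scale")
        changes.setdefault(condition, []).append(after - before)
    return {condition: mean(deltas) for condition, deltas in changes.items()}


# Invented example ratings, for illustration only.
example = [
    ("empathic", 5, 7),
    ("empathic", 6, 7),
    ("non-empathic", 5, 6),
    ("non-empathic", 6, 6),
]
print(mood_changes(example))  # {'empathic': 1.5, 'non-empathic': 0.5}
```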

After analyzing the collected data, Abdollahi and his colleagues found that participants benefited from interacting with both the empathic and the non-empathic versions of the robot. However, feedback from the exit surveys suggests that users perceived the empathic Ryan as more engaging and likable.

These findings could serve as a basis for future studies with larger samples of participants, which could further assess how artificial emotional intelligence affects users' perceptions of social robots. The work could also inspire other roboticists to develop techniques that enhance socially assistive robots' ability to gauge human emotions and adapt their facial expressions or interaction style accordingly.