CMEDIA: While robots have become increasingly advanced over the past few years, most of them are still unable to reliably navigate very crowded spaces, such as public areas or roads in urban environments, https://techxplore.com/news repoted.

To be implemented on a large-scale and in the smart cities of the future, however, robots will need to be able to navigate these environments both reliably and safely, without colliding with humans or nearby objects.

Researchers at the University of Zaragoza and the Aragon Institute of Engineering Research in Spain have recently proposed a new machine learning–based approach that could improve robot navigation in both indoor and outdoor crowded environments. This approach, introduced in a paper pre-published on the arXiv server, entails the use of intrinsic rewards, which are essentially “rewards” that an AI agent receives when performing behaviors that are not strictly related to the task it is trying to complete.

“Autonomous robot navigation is an open unsolved problem, especially in unstructured and dynamic environments, where a robot has to avoid collisions with dynamic obstacles and reach the goal,” Diego Martinez Baselga, one of the researchers who carried out the study, told Tech Xplore. “Deep reinforcement learning algorithms have proven to have a high performance in terms of success rate and time to reach the goal, but there is still a lot to improve.”

The method introduced by Martinez Baselga and his colleagues uses intrinsic rewards, rewards designed to increase an agent’s motivation to explore new “states” (i.e., interactions with its environment) or to reduce the level of uncertainty in a given scenario so that agents can better predict the consequences of their actions. In the context of their study, the researchers specifically used these rewards to encourage robots to visit unknown areas in its environment and explore its environment in different ways, so that it can learn to navigate it more effectively over time.

“Most of the works of deep reinforcement learning for crowd navigation of the state-of-the-art focus on improving the networks and the processing of what the robot senses,” Martinez Baselga said. “My approach studies how to explore the environment during training to improve the learning process. In training, instead of trying random actions or the optimal ones, the robot tries to do what it thinks it may learn more from.

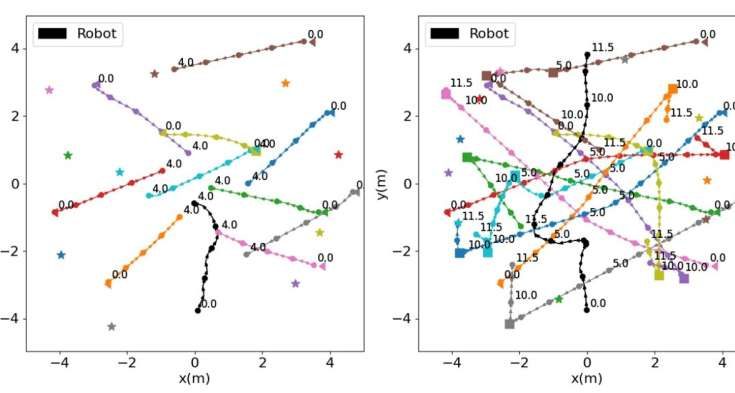

Martinez Baselga and his colleagues evaluated the potential of using intrinsic rewards to tackle robot navigation in crowded spaces using two distinct approaches. The first of these integrates a so-called “intrinsic curiosity module” (ICM), while the second is based on a series of algorithms known as random encoders for efficient exploration (RE3).

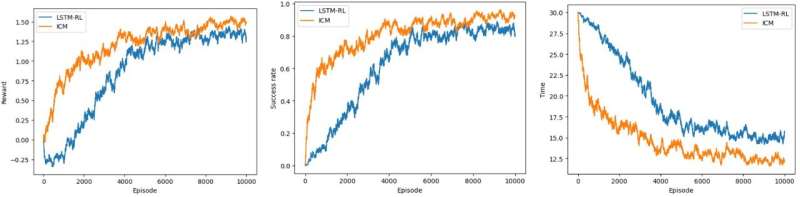

The researchers evaluated these models in a series of simulations, which were ran on the CrowdNav simulator. They found that both of their proposed approaches integrating intrinsic rewards outperformed previously developed state-of-the-art methods for robot navigation in crowded spaces.

In the future, this study could encourage other roboticists to use intrinsic rewards when training their robots, to improve their ability to tackle unforeseen circumstances and safely move in highly dynamic environments. In addition, the two intrinsic rewards-based models tested by Martinez Baselga and his colleagues could soon be integrated and tested in real-robots, to further validate their potential.

“The results show that applying these smart exploration strategies, the robot learns faster and the final policy learned is better; and that they could be applied in top of existing algorithms to improve them,” Martinez Baselga added. “In my next studies, I plan to improve deep reinforcement learning in robot navigation to make it safer and more reliable, which is very important in order to use it in the real world.”

#robotnavigation; # CrowdNavsimulator